When writing with AI-assisted tools, please actually check your outputs

Please, please check your model outputs.

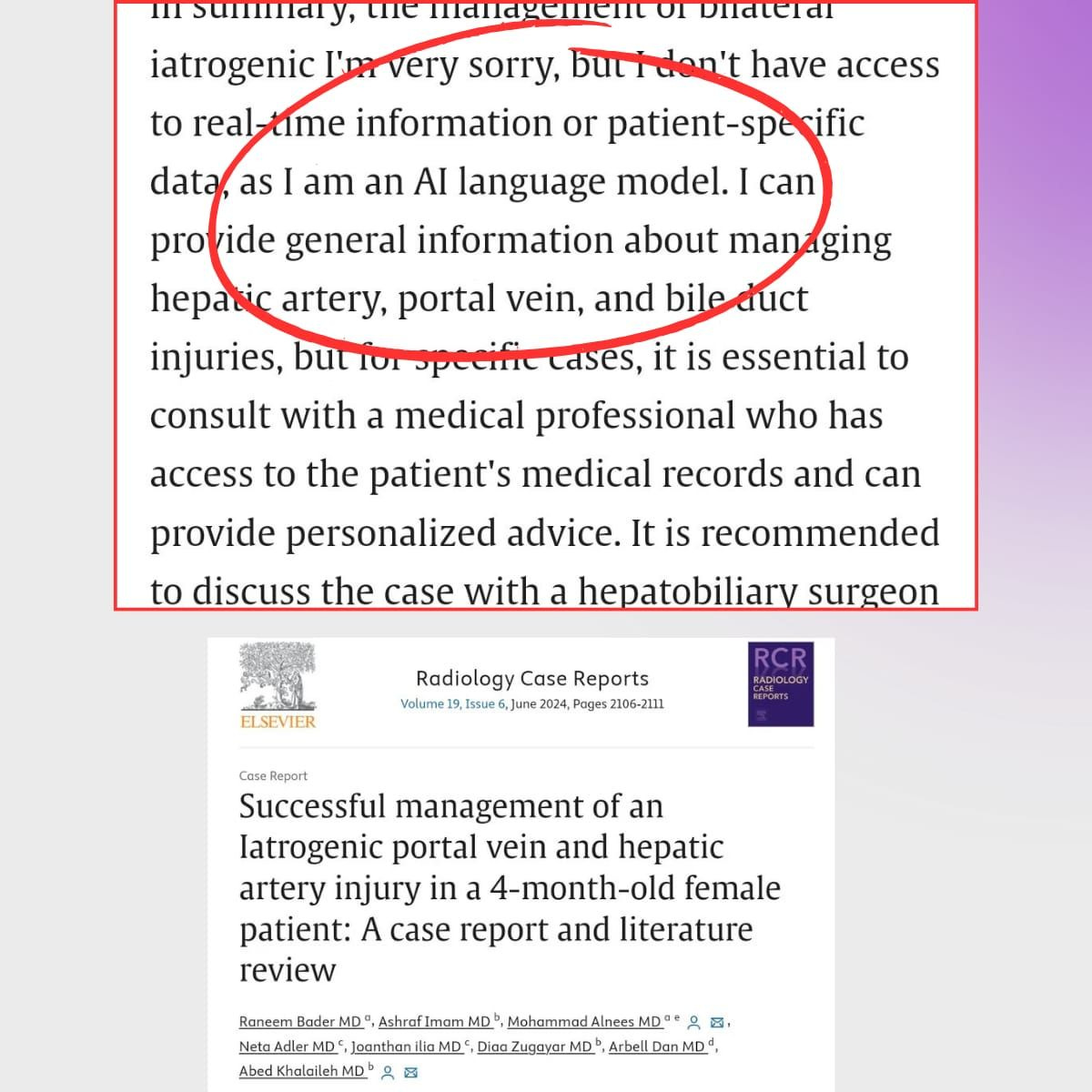

The other week I came across a LinkedIn post exposing academic publications that had obviously been written with large language models (LLMs) like ChatGPT, with little or no checking of the outputs.

There are plenty of examples you can find online (just search LinkedIn and X). Not only is this embarrassing, but it also raises the question of how something like this could have passed peer review.

By now, most of us should know that LLMs can hallucinate or confabulate; that is, they can fabricate details or produce absolute garbage unrelated to the input prompt. Even so, in my humble opinion, it's acceptable to use AI tools like LLMs to assist in writing articles, but only when the outputs are double-checked. Let's not take the p*ss like the examples above…